Shaping Mercedes-Benz’s future driver AI-assistant through user insights

At a Glance

Mercedes-Benz-sponsored research on a proactive AI Assistant that acts autonomously without driver input.

Business Question: Does providing an explanation for an AI's proactive action build user trust and deliver value?

Role: Lead Researcher

Impact: Validated key hypotheses, leading to a scaled study approach in collaboration with Mercedes-Benz and UCL.

Business Challenge

Mercedes-Benz is exploring the future of driving with a proactive AI assistant that anticipates driver needs and takes action without input. Understanding how to build user trust and deliver user value at scale is key to the success of this product. A critical question to address during the early stages of development is whether drivers want explanations for the assistant’s actions. For my master’s dissertation, I led a study to answer Mercedes-Benz’s questions, generating insights to guide product design and accelerate user adoption.

Research Questions

How well can drivers grasp what triggered a proactive assistant’s action?

How does being informed of the trigger behind a proactive action impact drivers’ experience with the assistant?

Approach

Both quantitative and qualitative methods could have worked for this study. I chose a quantitative approach because it allowed me to compare results between groups and gain insights that could apply to a larger audience. Because such comparisons benefit from a large number of responses, I ran a survey experiment using videos and included a few open-ended questions to capture the context behind the numbers.

141 driver responses

2 use cases tested

Music use case, with explanation (built with Unity)

The use cases are:

Lowering music volume, triggered by a conversation occurring in the car

Navigating to a restaurant, triggered by the time reaching noon

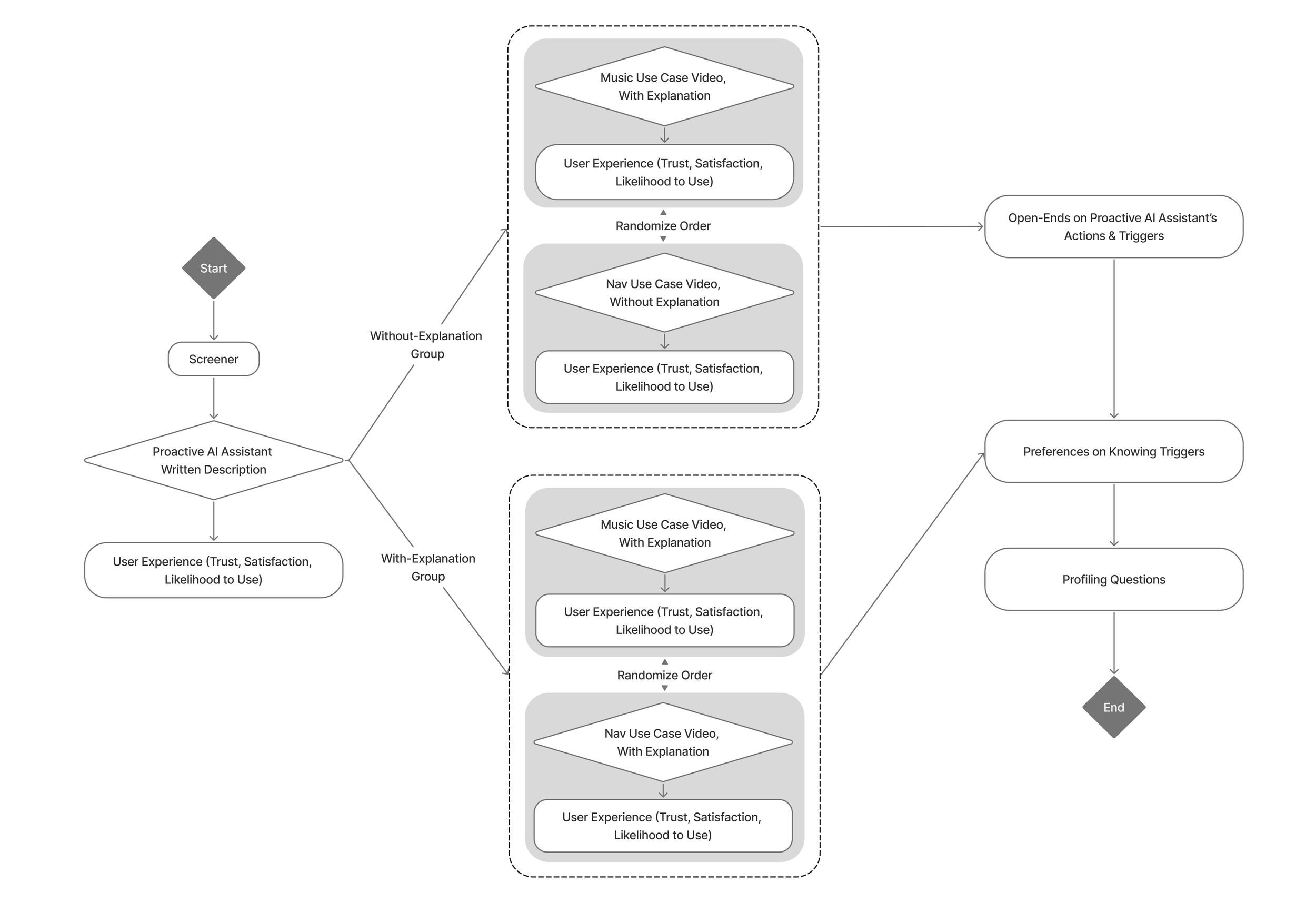

Respondents were split into two groups: one saw videos with explanations of the AI assistant’s triggers, the other without. The “no-explanation” group answered open-ended questions about what they believed triggered each action.

Quantitative results were analyzed for comprehension, trust, and satisfaction, while open-ended responses were coded to understand reasoning.
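As a rough illustration of the group comparison described above, the sketch below computes the mean change from baseline for each group. The field names, the rating scale, and every number in it are hypothetical placeholders, not study data, and the study's actual analysis may have differed.

```python
from statistics import mean

# Hypothetical ratings (e.g. on a 1-7 scale); illustrative values only, not study data.
responses = [
    {"group": "explanation", "trust_before": 4, "trust_after": 4},
    {"group": "explanation", "trust_before": 5, "trust_after": 4},
    {"group": "no_explanation", "trust_before": 4, "trust_after": 5},
    {"group": "no_explanation", "trust_before": 3, "trust_after": 3},
]

def mean_change(rows, group, before="trust_before", after="trust_after"):
    """Average (after - before) rating for respondents in one group."""
    deltas = [r[after] - r[before] for r in rows if r["group"] == group]
    return mean(deltas)

# One mean change score per experimental group.
summary = {g: mean_change(responses, g) for g in ("explanation", "no_explanation")}
print(summary)
```

The same function could be reused for satisfaction by passing different `before` and `after` field names.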

Navigation use case, with explanation (built with Unity)

Respondent survey flow

Key Learnings

1 |

Finding

Trigger salience affects comprehension. In the music case, 83% identified the trigger (vs. 42% for navigation), likely because the volume change was central and audible, while navigation cues were less visible.

Design Implication

Visual and auditory cues should clearly highlight both trigger and action. More salient triggers improve understanding and adoption.

2 |

Finding

Explanations influence trust differently than expected. Drivers reported slightly better experiences without explanations (+0.27 vs. -0.13 change in trust) but 98% still wanted them. Prior research suggests that explanations that conflict with user expectations can instead harm the experience.

Design Implication

Clear onboarding and tutorials can help drivers establish accurate expectations of how the assistant works prior to use. Explanations should complement, not conflict with, user expectations.

3 |

Finding

Context matters for user acceptability of proactive action. Drivers had a better experience with music (+0.15 vs. -0.17 satisfaction change from baseline) and found it easy to understand, while navigation was harder to interpret and raised concerns about privacy.

Design Implication

AI proactivity should adapt to context. Some actions may require user confirmation before execution.

Impact

The findings aligned with Mercedes-Benz’s initial hypotheses and led to plans with UCL to scale the study approach across additional automotive use cases.

The research also surfaced how user trust is shaped by the relationship between driving context, system proactivity, and explanation design. These insights are informing a broader workstream focused on predicting when users can understand system triggers independently, and when explanations are necessary to support trust and adoption.

Reflection

For next steps, I would conduct a qualitative follow-up with driving simulator sessions and interviews to observe driver behavior and explore what makes an explanation effective.

From this project, I developed a stronger research approach for AI-powered systems:

Longitudinal methods are essential to understand how users learn and adapt to AI behavior over time.

Testing across multiple contexts is critical, as context strongly influences comprehension, trust, and the need for explanations.